- Download Spark Java JAR: how to
- Download Spark Java JAR: install
- Download Spark Java JAR: software

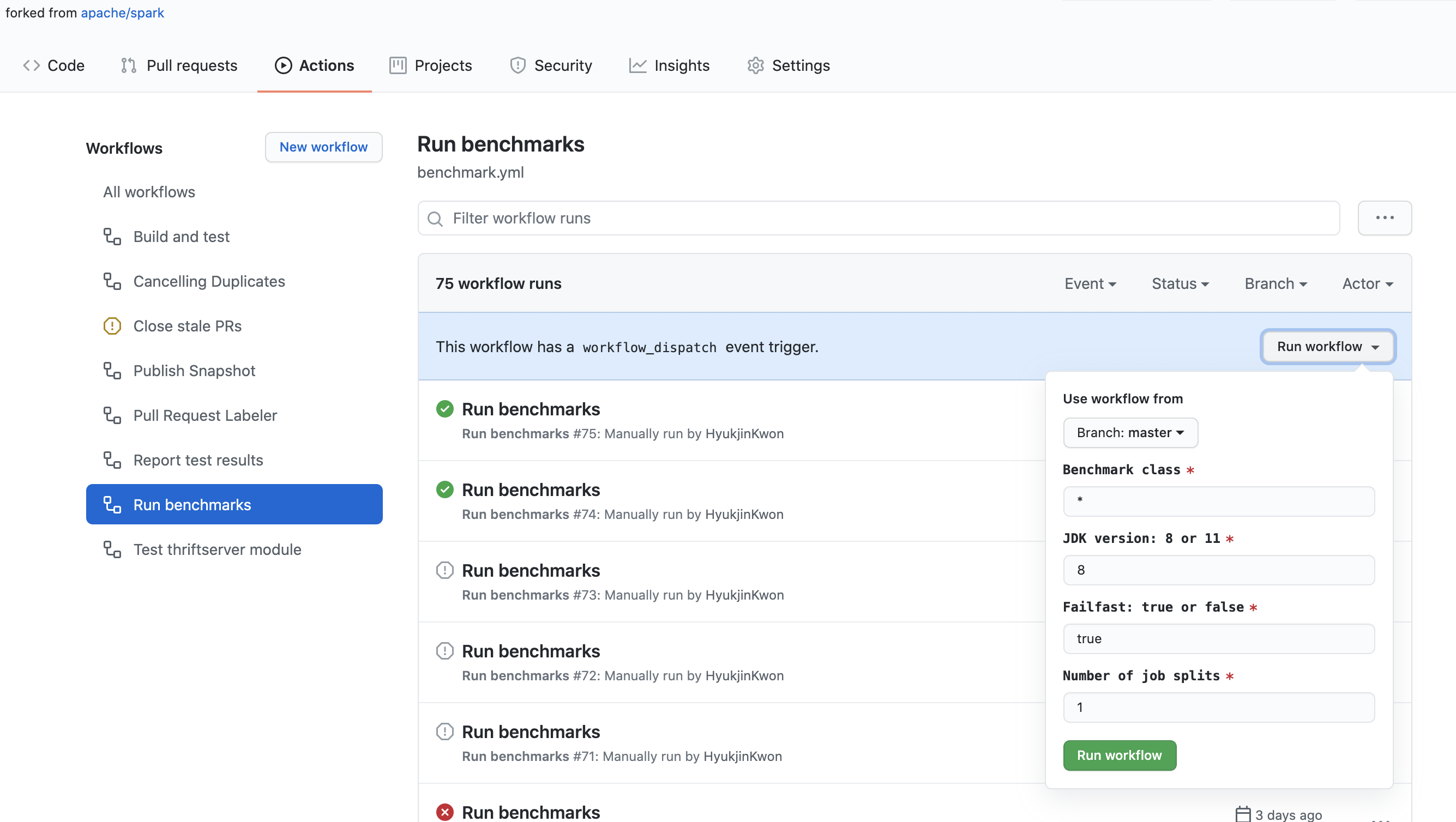

Spark NLP and all of its pretrained models and pipelines can be used entirely offline, with no access to the Internet. If you are behind a proxy or a firewall with no access to the Maven repository (to download packages) and/or no access to S3 (to download models and pipelines automatically), follow these instructions to run Spark NLP offline without any limitations:

- Instead of using the Maven package, load the Spark NLP Fat JAR.
- Instead of using `PretrainedPipeline` for pretrained pipelines or the `.pretrained()` function for pretrained models, manually download your pipeline or model from Models Hub, extract it, and load it.

For example, instead of calling `PerceptronModel.pretrained("pos_ud_gsd", lang="fr")` online, download this model, extract it, load it, and set its output column with `setOutputCol("pos")`. Pipelines work the same way: instead of `PretrainedPipeline('explain_document_dl', lang='en')`, download the pipeline, extract it, and load it.
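The offline setup described above can be sketched in PySpark roughly as follows. This is a minimal sketch, not the official Spark NLP snippet: the helper names (`offline_spark_conf`, `start_offline_session`, `load_offline_models`), the Fat JAR path, the model and pipeline folders, and the memory and Kryo values are all hypothetical placeholders. `spark.jars` is the standard Spark setting for adding local JARs, and `.load()` is the Spark ML reader method exposed by Spark NLP models and by `PipelineModel`.

```python
import os


def offline_spark_conf(fat_jar_path, driver_memory="16G"):
    """Spark settings for running Spark NLP from a local Fat JAR (no Maven access).

    fat_jar_path is wherever you saved the Fat JAR (hypothetical placeholder);
    the memory and Kryo values are assumed defaults, not required settings.
    """
    return {
        "spark.jars": fat_jar_path,  # local JAR instead of --packages from Maven
        "spark.driver.memory": driver_memory,
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        "spark.kryoserializer.buffer.max": "2000M",
    }


def start_offline_session(fat_jar_path):
    """Start a local SparkSession that loads Spark NLP from the Fat JAR."""
    if not os.path.isfile(fat_jar_path):
        raise FileNotFoundError(f"Fat JAR not found: {fat_jar_path}")
    # Imported here so the helpers above work even where PySpark is absent.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName("Spark NLP offline").master("local[*]")
    for key, value in offline_spark_conf(fat_jar_path).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


def load_offline_models(pos_model_dir, pipeline_dir):
    """Load a POS model and a pipeline that were downloaded and extracted by hand.

    Replaces PerceptronModel.pretrained("pos_ud_gsd", lang="fr") and
    PretrainedPipeline('explain_document_dl', lang='en'), which need Internet access.
    Requires an active SparkSession (for example from start_offline_session).
    """
    from pyspark.ml import PipelineModel
    from sparknlp.annotator import PerceptronModel

    french_pos = (
        PerceptronModel.load(pos_model_dir)
        .setInputCols(["document", "token"])
        .setOutputCol("pos")
    )
    pipeline = PipelineModel.load(pipeline_dir)
    return french_pos, pipeline
```

Note that `PipelineModel.load` returns a plain Spark ML `PipelineModel`, so you run it with `transform()` on a DataFrame rather than through the `PretrainedPipeline` wrapper.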

# Download Spark Java JAR: install

C:\Users\maz>%HADOOP_HOME%\bin\winutils.exe chmod 777 /tmp/Įither create a conda env for python 3.6, install pyspark=3.1.2 spark-nlp numpy and use Jupyter/python console, or in the same conda env you can go to spark bin for pyspark –packages :spark-nlp_2.12:3.4.1.C:\Users\maz>%HADOOP_HOME%\bin\winutils.exe chmod 777 /tmp/hive.Set Paths for %HADOOP_HOME%\bin and %SPARK_HOME%\bin Set the env for HADOOP_HOME to C:\hadoop and SPARK_HOME to C:\spark

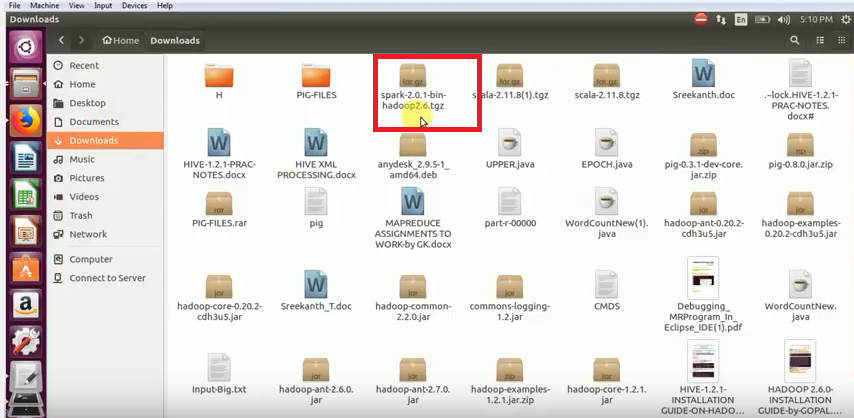

During installation after changing the path, select setting Pathĭownload winutils and put it in C:\hadoop\bin ĭownload Apache Spark 3.1.2 and extract it in C:\spark.Make sure you install it in the root C:\java Windows.
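Before starting PySpark, it can help to confirm that the variables and folders from the Windows steps above are in place. The sketch below is a hypothetical helper, not part of Spark NLP or Hadoop: it only checks that `HADOOP_HOME` and `SPARK_HOME` are set, that `winutils.exe` sits in `%HADOOP_HOME%\bin`, and that both `bin` folders are on Path. The environment mapping and the file-existence check are passed in, so it can be exercised on any OS.

```python
import ntpath


def check_windows_setup(env, exists):
    """Return a list of problems in a Spark-on-Windows setup (empty list = OK).

    env:    a mapping such as os.environ
    exists: a path check such as os.path.exists (injected for testability)
    """
    problems = []
    hadoop_home = env.get("HADOOP_HOME")
    spark_home = env.get("SPARK_HOME")

    if not hadoop_home:
        problems.append("HADOOP_HOME is not set")
    elif not exists(ntpath.join(hadoop_home, "bin", "winutils.exe")):
        problems.append("winutils.exe not found in %HADOOP_HOME%\\bin")
    if not spark_home:
        problems.append("SPARK_HOME is not set")

    # Windows separates Path entries with ';' and compares them case-insensitively.
    raw_path = env.get("PATH") or env.get("Path") or ""
    entries = {p.strip().rstrip("\\").lower() for p in raw_path.split(";") if p.strip()}

    for var, home in (("HADOOP_HOME", hadoop_home), ("SPARK_HOME", spark_home)):
        if not home:
            continue
        # Accept either the expanded folder or the unexpanded %VAR%\bin form.
        accepted = {ntpath.join(home, "bin").lower(), f"%{var}%\\bin".lower()}
        if not accepted & entries:
            problems.append(f"%{var}%\\bin is not on Path")
    return problems


if __name__ == "__main__":
    import os

    for problem in check_windows_setup(os.environ, os.path.exists) or ["Setup looks OK"]:
        print(problem)
```

Running it from the same console you will start `pyspark` in shows whether that session actually sees the new variables; a console opened before you changed them will not.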

# Download Spark Java JAR: how to

How to correctly install Spark NLP on Windows 8 and 10: to take full advantage of Spark NLP on Windows (8 or 10), you need to set up Apache Spark, Apache Hadoop, and Java correctly by following the installation steps listed earlier.

# Download Spark Java JAR: software

To launch an EMR cluster with Apache Spark/PySpark and Spark NLP correctly, you need a bootstrap script and a software configuration.

A sample bootstrap script:

```bash
#!/bin/bash
# Append the Spark/Python environment to .bashrc and load it
echo -e 'export PYSPARK_PYTHON=/usr/bin/python3
export SPARK_JARS_DIR=/usr/lib/spark/jars
export SPARK_HOME=/usr/lib/spark' >> $HOME/.bashrc && source $HOME/.bashrc

# Install the AWS CLI, boto, and the Spark NLP Python package
sudo python3 -m pip install awscli boto spark-nlp
```

A sample of your software configuration in JSON on S3 (must have public access): [
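If you prefer to launch the cluster from Python instead of the console, the bootstrap script can be referenced from boto3's EMR `run_job_flow` call. This is a sketch under assumptions: the helper names, the release label, instance type and count, region, S3 URI, and the `spark-env` export are illustrative values rather than the software configuration from the original sample, and the IAM role names are the defaults created by `aws emr create-default-roles`.

```python
import json


def build_emr_request(name, bootstrap_s3_uri, release_label="emr-6.5.0",
                      instance_type="m5.xlarge", instance_count=3):
    """Build keyword arguments for boto3's EMR run_job_flow call.

    All defaults are illustrative assumptions; adjust them to your account.
    """
    return {
        "Name": name,
        "ReleaseLabel": release_label,
        "Applications": [{"Name": "Hadoop"}, {"Name": "Spark"}],
        "Instances": {
            "MasterInstanceType": instance_type,
            "SlaveInstanceType": instance_type,
            "InstanceCount": instance_count,
            "KeepJobFlowAliveWhenNoSteps": True,
        },
        # Runs the bootstrap script (exports + pip install spark-nlp) on every node
        "BootstrapActions": [{
            "Name": "install-spark-nlp",
            "ScriptBootstrapAction": {"Path": bootstrap_s3_uri, "Args": []},
        }],
        # Assumed software configuration: make PySpark use python3
        "Configurations": [{
            "Classification": "spark-env",
            "Configurations": [{
                "Classification": "export",
                "Properties": {"PYSPARK_PYTHON": "/usr/bin/python3"},
            }],
        }],
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
        "VisibleToAllUsers": True,
    }


def launch_cluster(request, region_name="us-east-1"):
    """Submit the request and return the new cluster ID (needs AWS credentials)."""
    # Imported lazily so build_emr_request can be used without boto3 installed.
    import boto3

    emr = boto3.client("emr", region_name=region_name)
    response = emr.run_job_flow(**request)
    return response["JobFlowId"]


if __name__ == "__main__":
    # Hypothetical bucket and key: upload your bootstrap script there first.
    req = build_emr_request("spark-nlp-cluster", "s3://my-bucket/bootstrap.sh")
    print(json.dumps(req, indent=2))
```

Keeping the request in a plain dictionary makes it easy to print and review before `launch_cluster` actually creates (and starts billing for) the cluster.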

If you are interested, there is a simple SBT project, Spark NLP SBT Starter, that shows how to use Spark NLP in your own projects. spark-nlp on Apache Spark 3.0.x and 3.1.x: //

